Michela Coslovich: Your artistic research focuses on the complexity of computer-generated imagery (CGI): how does it relate to contemporary digital culture?

Sheung Yiu: That is a big question and deserves a much more in-depth answer, but I will try to keep it brief. First, let me clarify: when I say CGI, I don’t just mean fancy visual effects in games and movies. I am also referring to a new genre of images created or sustained by a myriad of computational techniques. That ranges from metadata-embedded smartphone photos, to AI-powered beautifying filters that are applied to your front-facing camera by default even when you have not selected any filter (as in the case of TikTok earlier this year), to deepfakes and digital objects created by 3D photogrammetry.

CGI and contemporary digital culture are inextricably intertwined, and they affect and inform each other’s development. For one, I think the most obvious characteristic of CGI that relates to contemporary digital culture is its networked nature. Images are grouped by albums on social media platforms, by categories on stock photography sites, and by labels and tags in computer vision datasets. Network culture affects not only how images are disseminated but also how they create meaning. An image is rarely understood alone, especially in digital culture. Instead, it is always understood together with other images, connected through sensor data and human labels, no matter how visually unrelated they may seem on the surface. Images are connected to other images, but not only that: they are connected to a digital ecology consisting of computational models, human users, and machines. A selfie unsuspectingly uploaded to Flickr is scraped into a training dataset for facial recognition. The resulting algorithm is sold to a state agency and then used by law enforcement to identify political activists, or by a soldier controlling an aerial drone. The ripple effect of an image is convoluted, and it spreads across time and space beyond the moment of capture and upload. In fact, I have moved from using the term CGI to ‘algorithmic image system’ to denote the hyper-connectedness of computational images.

Another aspect concerns scale, in both the horizontal and the vertical sense. By horizontal scale, I mean that the abundance of data and the surplus of online images create what we now call big data. The emergence of big data provides the necessary material for statistical prediction and pattern recognition. Facebook most famously exploits users’ profile data and their online interactions to predict future behaviors and sell targeted content to unsuspecting individuals, a practice known as surveillance capitalism. A similar logic is being applied to computer vision, where, by analyzing large numbers of images, computer scientists establish a statistical correlation between a particular pixel pattern and a semantic conclusion. Digital culture and visual culture are both experiencing a statistical turn.

By vertical scale, I mean that the hyper-connectedness of images and the omnipresence of cameras make it possible to capture the same objects from different distances, in different positions, and at different resolutions. For some popular visual subjects, we have captured so many images and so much data that we can now observe them from the pictorial level all the way down to the pixel level, or even in deep layers (deep in the neural network sense of deep learning). The many scales at which we capture and observe the same subject have implications for how much more information we can extract from an image. The Google computer vision project NeRF in the Wild uses a special type of neural network to interpolate the 3D structure of famous monuments from tourist photos taken from different vantage points. In remote sensing, a mathematical correlation between pixel value and tree size, established through ground-level observation, helps scientists interpret satellite images of trees taken from space, revealing information that is otherwise lost due to resolution limits. Even though individual trees are invisible in a satellite image in which one pixel represents a 10 m × 10 m area of land, scientists can recover, or ‘resurrect’, a tree by applying the same statistical model established by comparing ground-level observation with satellite data. In other words, this new way of trans-scalar seeing allows us to almost limitlessly extrapolate and interpolate information encoded in a digital image, converting between human observations and machine calculations and traversing between human scale and planetary scale.
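The satellite example above can be made concrete with a small sketch. It is a minimal illustration, not the scientists' actual model: it assumes a simple linear relation between pixel value and tree height, and every number and name in it (`ground_pixel_values`, `estimate_tree_height`, and so on) is hypothetical.

```python
# Illustrative sketch only: all values are synthetic, not real measurements,
# and a straight-line fit stands in for whatever model is used in practice.

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical ground plots: the satellite pixel value over each plot,
# paired with the mean tree height (in metres) measured on the ground.
ground_pixel_values = [0.10, 0.20, 0.30, 0.40]
ground_tree_heights = [5.0, 9.0, 13.0, 17.0]

# Step 1: establish the correlation where ground truth exists.
slope, intercept = fit_line(ground_pixel_values, ground_tree_heights)

def estimate_tree_height(pixel_value):
    """Apply the fitted relation to a pixel that nobody visited on the ground."""
    return slope * pixel_value + intercept

# Step 2: 'resurrect' tree heights for pixels with no ground observation.
unvisited_pixels = [0.15, 0.35]
estimates = [estimate_tree_height(p) for p in unvisited_pixels]
print(estimates)
```

The point of the sketch is the direction of inference: the relation is learned at human scale, where trees can be measured, and then applied at planetary scale, where only pixels are available.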

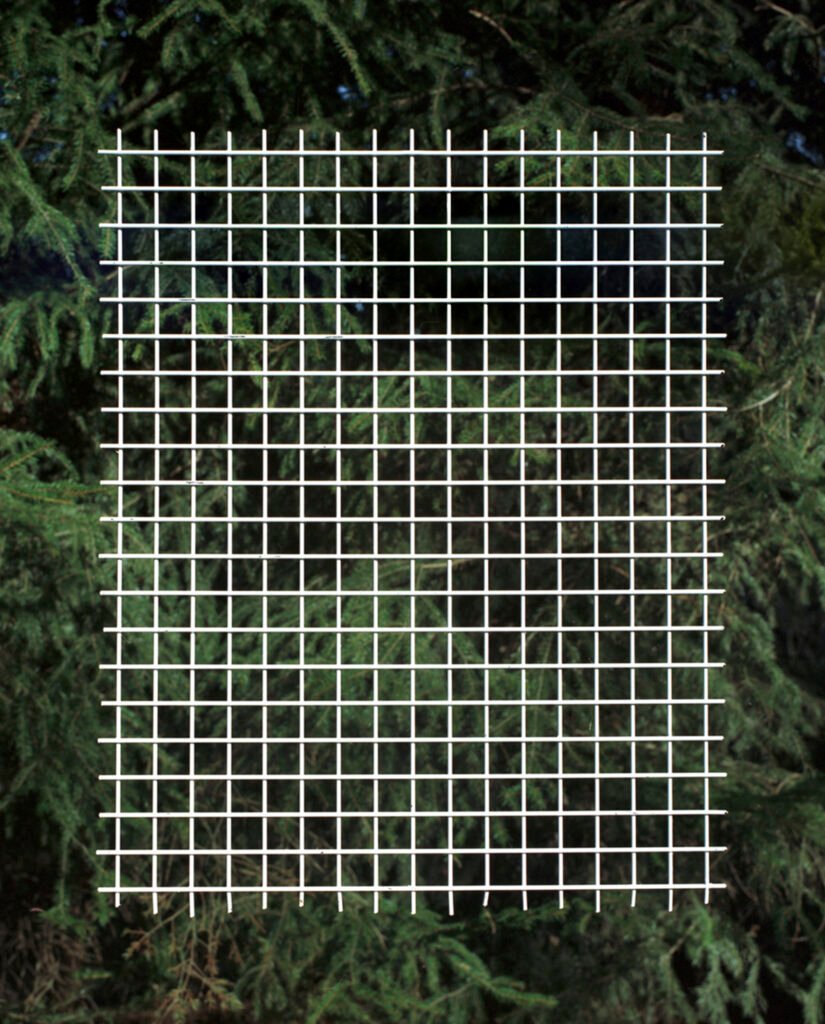

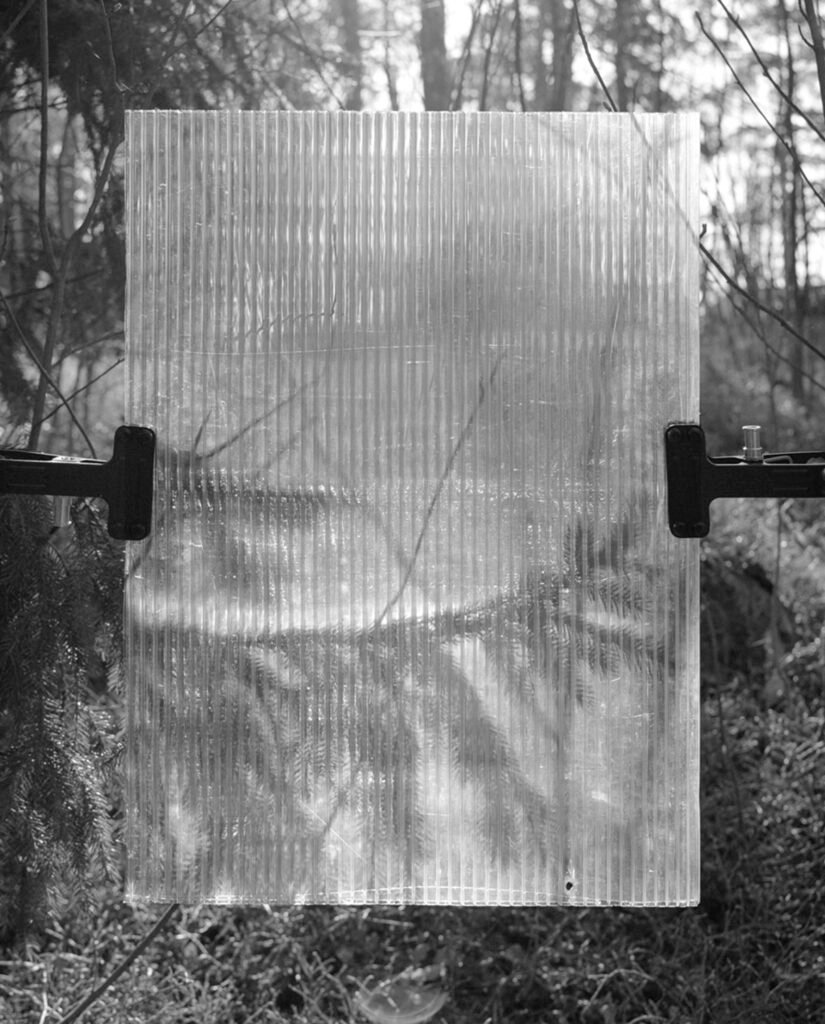

Three Visions, 2019, photo triptychs.

Human vision is increasingly fused with machines and extravisual devices. This idea resonates among the three images: the human hand touching the machines, the circular laser emitter and the researcher’s eye, the sweat on the researcher’s face and the sweat on the laptop screen.

MC: Is there a link between your work and the approach used by the Group/f64 and Ansel Adams’ landscape photography?

SY: My process isn’t necessarily influenced by Group/f64, and I did not think about any pioneering landscape photographer at all. In fact, it was not until I talked with the remote sensing scientists that I started looking back at the love affair between landscape and photography. Landscape photography of that era exemplifies a romantic vision of what nature is and what photography can do. I find the contrast between this romanticism and the scientists’ mathematical approach to the forest very interesting. But later on, I also realized that the way the canonical photographers rendered landscapes is not so different from how remote sensing scientists observe the forest. The way a terrestrial laser scanner systematically scans through the whole forest point by point is comparable to the zone system invented by Ansel Adams, which renders a landscape into different tonal zones. Remote sensing has forest reflectance models; photography has the zone system.

In my photobook Ground Truth, in addition to referencing Group/f64, I added a small booklet composed of photographs of spreads from a photo guidebook called The Art of Scenic Photography. The book is written by two professional photographers teaching amateurs how to take good landscape photographs. Between chapters, alongside practical compositional tips, they sporadically express their philosophy of landscape, writing, for example, that light is the alphabet of photography, or that the role of an artistic photographer is that of an interpreter. The excerpt serves as a contrast to the way remote sensing scientists think about light and interpretation.

Untitled (lens), 2020, 3D rendering.

Part of a triptych of a virtual pine twig wrapped around a spherical lens with different degrees of subdivision surface modifier applied. The image is inspired by conversations with the scientists and the field trip I took with them. The circular form, which symbolizes a waterdrop, a lens and the sun, is a visual thread that connects the whole project. The circular form signifies a source of light, a viewing portal and optical phenomena such as refraction.

point cloud_particle_tree 12, 2020, 3D rendering.

Digital art using data captured from the terrestrial laser scanning of a tree.

MC: Your project is accompanied by archival imagery. How does it relate to the rest of the work, and what role does it play?

SY: I incorporate archival images in Ground Truth because whenever I am working on a project, I have a natural tendency to investigate why an image looks the way it does, why it is made in a certain way, and what the grammar of a specific image-making technique is. For me, what is behind the image is often much more interesting than what is on the pictorial surface, because peeking through the surface are often culturally constructed ways of seeing and deep-rooted philosophies of knowledge encoded in media standards and conventions of representation. In my earlier project, The Twinkling of The Eye, I reviewed seven famous representations of water drops in history, from da Vinci’s to Harold Edgerton’s, from drawing to computer simulation, to show how the same subject reproduced by different imaging technologies reveals vastly different understandings of vision, knowledge and power. In my research, archival images become an invaluable resource because they supplement present-day images with a visual history that illuminates where these conventions come from. I use archival images to place images created by obscure computational techniques or advanced apparatus within the long history of humans making sense of the world through image-making. I do this because, firstly, I want to bring these visual spectacles back to the ground, to show that remote sensing, which may sound exotic and futuristic, has an analog past. And secondly, I want to show that remote sensing as a paradigm is a result of history and conventions, and that this specific way of rendering the world is historically contingent.

MC: What’s in your future?

SY: I finished a photobook, Ground Truth, last autumn, and had a solo exhibition at Titanik Gallery in Turku, Finland, and at MAAtila in Helsinki this spring. The project is now being exhibited at the Circulation(s) Festival in Paris.

Other than that, I am currently producing, with my creative partner Pekko Vasantola, a solo exhibition on COCO, a state-of-the-art dataset for training object recognition, among other computer vision tasks. It will open in August in Finland.

After this project, I am continuing my exploration of CGI with a project on 3D computer graphics and rendering algorithms, now tentatively called Everything Is a Projection. During the pandemic, I learned 3D modeling and converted everything on my work desk into virtual objects. I am experimenting with different ways of translating them back into images and objects, and I am again looking into the language of 3D CGI: UV unwrapping, ray tracing, photogrammetry, texture maps, etc. Right now, I am fascinated by scratches on glass and fingerprints on clean surfaces. The project will materialize in many forms, one of which is a photobook, which I am already working on with graphic designer Emery Norton.

Sheung Yiu (HK/FI) is a Hong Kong-born, image-centered artist and researcher based in Helsinki. His artwork explores the act of seeing through algorithmic image systems and sense-making through networks of images. His research interests concern the increasing complexity of algorithmic image systems in contemporary digital culture. He looks at photography through the lens of new media, scales, and network thinking; he ponders how posthuman cyborg vision and the technology that produces it transform ways of seeing and knowledge-making. Adopting multi-disciplinary collaboration as a mode of research, his works examine the poetics and politics of algorithmic image systems, such as computer vision, computer graphics, and remote sensing, to understand how to see something where there is nothing, how to digitize light, and how vision becomes prediction. His work takes the form of photography, videos, photo-objects, exhibition installations, and bookmaking.